Wild Patterns

Half-day Tutorial on Adversarial Machine Learning

Secure ML Research Tutorial: Wild Patterns Secure ML Library

“If you know the enemy and know yourself, you need not fear the result of a hundred battles”

Sun Tzu, The art of war, 500 BC

Upcoming tutorial editions:

- Next editions will be announced soon

Proposers' names, titles, affiliations, and emails:

- Battista Biggio, IAPR Member, IEEE Senior Member;

- Fabio Roli, IAPR Fellow, IEEE Fellow.

Pluribus One, Italy; and University of Cagliari, Dept. of Electrical and Electronic Engineering, Piazza d’armi, 09123 Cagliari, Italy.

Abstract: This tutorial introduces the fundamentals of adversarial machine learning, presenting a well-structured review of recently proposed techniques to assess the vulnerability of machine-learning algorithms to adversarial attacks (both at training and at test time), and some of the most effective countermeasures proposed to date. We consider these threats in different application domains, including object recognition in images, biometric identity recognition, and spam and malware detection.

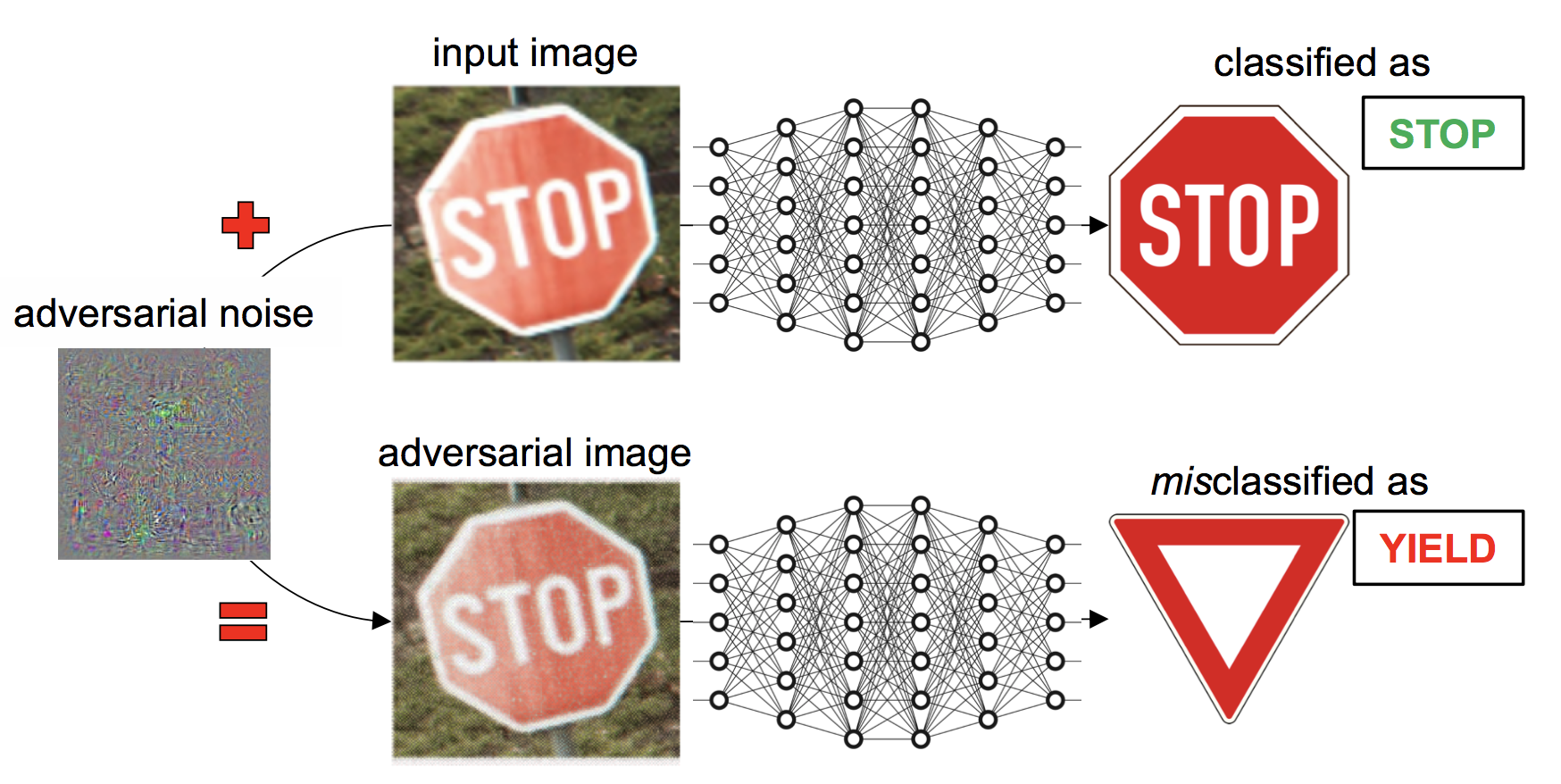

Description of the tutorial: Machine-learning and data-driven AI techniques, including deep networks, are currently used in several applications, ranging from computer vision to cybersecurity. In most of these applications, including spam and malware detection, the learning algorithm has to face intelligent and adaptive attackers who can carefully manipulate data to purposely subvert the learning process. As these algorithms were not originally designed under such premises, they have been shown to be vulnerable to well-crafted, sophisticated attacks, including test-time evasion attacks (also known as adversarial examples) and training-time poisoning attacks. The problem of countering these threats and learning secure classifiers and AI systems in adversarial settings has thus become the subject of an emerging, relevant research field in the area of machine learning and AI safety called adversarial machine learning.

Fig. 1. Example of adversarial manipulation of an input image, initially classified correctly as a stop sign by a deep neural network, to have it misclassified as a yield sign. The adversarial noise is magnified for visibility but remains imperceptible in the resulting adversarial image.
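The kind of manipulation shown in Fig. 1 can be illustrated, in a much simplified form, on a linear classifier. The sketch below is not taken from the tutorial material: the weights `w`, bias `b`, input `x`, and perturbation budget `eps` are hypothetical toy values, and the one-step sign-gradient perturbation follows the general idea described by Goodfellow et al. (2015) in the reading list rather than any specific method presented by the authors.

```python
import numpy as np

def predict(w, b, x):
    """Return 1 if the linear score w.x + b is positive, else 0."""
    return int(np.dot(w, x) + b > 0)

def evade(w, x, y, eps):
    """One-step sign-gradient perturbation with L-infinity norm at most eps.

    For a linear score s(x) = w.x + b the gradient with respect to x is w,
    so the score is pushed away from the true class y by stepping along
    -sign(w) when y == 1 and along +sign(w) when y == 0.
    """
    direction = -np.sign(w) if y == 1 else np.sign(w)
    return x + eps * direction

# Hypothetical toy classifier and input (assumptions for illustration only).
w = np.array([1.0, -2.0, 0.5])
b = 0.0
x = np.array([1.0, 0.2, 0.4])          # score = 0.8, predicted class 1

x_adv = evade(w, x, y=1, eps=0.3)      # each feature moves by at most 0.3
print(predict(w, b, x), predict(w, b, x_adv))
```

In this toy setting a change of at most 0.3 per feature is enough to flip the predicted class. Deep networks are not linear, so real attacks compute the gradient numerically and often iterate, but the principle of a small, bounded, gradient-guided change is the same.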

The purposes of this tutorial are:

- to introduce the fundamentals of adversarial machine learning;

- to illustrate the design cycle of machine-learning algorithms for adversarial tasks;

- to present novel, recently proposed techniques to assess the performance of learning algorithms under attack, evaluate their vulnerabilities, and implement defense strategies that improve robustness to attacks; and

- to show some applications of adversarial machine learning for object recognition in images, biometric identity recognition, spam and malware detection.

Point-form outline of the tutorial

- Introduction to adversarial machine learning. Introduction by practical examples from computer vision, biometrics, spam and malware detection. Previous work on adversarial learning and recognition. Basic concepts and terminology. The concept of adversary-aware classifier. Definitions of attack and defense.

- Design of learning-based pattern classifiers in adversarial environments. Modelling adversarial tasks. The two-player model (the attacker and the classifier). Levels of reciprocal knowledge of the two players (perfect knowledge, limited knowledge, knowledge by queries and feedback). The concepts of security by design and security by obscurity.

- System design: vulnerability assessment and defense strategies. Attack models against pattern classifiers. The influence of attacks on the classifier: causative and exploratory attacks. Type of security violation: integrity, availability and privacy attacks. The specificity of the attack: targeted and indiscriminate attacks. Vulnerability assessment by performance evaluation. Taxonomy of possible defense strategies. Examples from computer vision, biometrics, spam and malware detection. Hands-on web demo on adversarial examples, "Deep Learning security".

- Summary and outlook. Current state of this research field and future perspectives.
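As a rough illustration of the outline item on vulnerability assessment by performance evaluation, the sketch below computes a simple security evaluation curve: classification accuracy as a function of the attacker's perturbation budget. Everything in it is an assumption made for illustration and is not drawn from the tutorial: the synthetic two-class data, the fixed linear classifier (`w`, `b`), the function name `security_curve`, and the choice of a worst-case L-infinity attacker.

```python
import numpy as np

def security_curve(w, b, X, y, eps_grid):
    """Accuracy of a linear classifier under a worst-case L-infinity attack.

    A perturbation with L-infinity norm eps can shift the linear score by at
    most eps * ||w||_1, so a sample stays correctly classified only if its
    signed margin exceeds that amount (ties are counted as errors).
    """
    scores = X @ w + b
    margins = np.where(y == 1, scores, -scores)   # positive when correct
    l1 = np.abs(w).sum()
    return [float(np.mean(margins - eps * l1 > 0)) for eps in eps_grid]

# Synthetic two-class data and a fixed classifier (hypothetical values).
rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=200)
X = rng.normal(size=(200, 2)) + 2.0 * (2 * y[:, None] - 1)
w = np.array([1.0, 1.0])
b = 0.0

eps_grid = np.linspace(0.0, 3.0, 7)
curve = security_curve(w, b, X, y, eps_grid)
for eps, acc in zip(eps_grid, curve):
    print(f"eps={eps:.1f}  accuracy={acc:.2f}")
```

The first point of the curve is the usual accuracy in the absence of attack; how quickly the curve drops as `eps` grows gives one way to compare the robustness of different classifiers.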

Description of the target audience of the tutorial:

This tutorial motivates and explains a topic of emerging importance for AI, and it is particularly devoted to:

- people who want to become aware of the new research field of adversarial machine learning and learn the fundamentals;

- people doing research in machine learning, AI safety, and pattern recognition applications which have a potential adversarial component, and wish to learn how the techniques of adversarial classification can be effectively used in such applications.

Further readings

- Biggio, B., Roli, F. Wild Patterns: Ten Years After the Rise of Adversarial Machine Learning. ArXiv, 2017. (our tutorial-related article)

- Barreno, M., Nelson, B., Sears, R., Joseph, A. D., Tygar, J. D. Can machine learning be secure? ASIACCS, 2006.

- Huang, L., Joseph, A. D., Nelson, B., Rubinstein, B., Tygar, J. D. Adversarial machine learning. AISec, 2011.

- Biggio, B., Nelson, B., Laskov, P. Poisoning attacks against SVMs. ICML, 2012.

- Biggio, B., Corona, I., Maiorca, D., Nelson, B., Srndic, N., Laskov, P., Giacinto, G., Roli, F. Evasion attacks against machine learning at test time. ECML-PKDD, 2013.

- Biggio, B., Fumera, G., Roli, F. Security evaluation of pattern classifiers under attack. IEEE Trans. Knowl. Data Eng., 2014.

- Szegedy, C., Zaremba, W., Sutskever, I., Bruna, J., Erhan, D., Goodfellow, I., Fergus, R. Intriguing properties of neural networks. ICLR, 2014.

- Xiao, H., Biggio, B., Brown, G., Fumera, G., Eckert, C., Roli, F. Is feature selection secure against training data poisoning? ICML, 2015.

- Nguyen, A. M., Yosinski, J., Clune, J. Deep neural networks are easily fooled: High confidence predictions for unrecognizable images. CVPR, 2015.

- Goodfellow, I., Shlens, J., Szegedy, C. Explaining and harnessing adversarial examples. ICLR, 2015.

- Moosavi-Dezfooli, S.-M., Fawzi, A., Frossard, P. Deepfool: a simple and accurate method to fool deep neural networks. CVPR, 2016.

- Papernot, N., McDaniel, P., Jha, S., Fredrikson, M., Celik, Z. B., Swami, A. The limitations of deep learning in adversarial settings. IEEE Euro S&P, 2016.

- Papernot, N., McDaniel, P., Goodfellow, I., Jha, S., Celik, Z. B., Swami, A. Practical black-box attacks against machine learning. ASIACCS, 2017.

- Carlini, N., Wagner, D. Towards evaluating the robustness of neural networks. IEEE Symp. SP, 2017.

- Demontis, A., Melis, M., Biggio, B., Maiorca, D., Arp, D., Rieck, K., Corona, I., Giacinto, G., Roli, F. Yes, machine learning can be more secure! a case study on Android malware detection. IEEE Trans. Dependable and Secure Comp., 2017.

- Melis, M., Demontis, A., Biggio, B., Brown, G., Fumera, G., Roli, F. Is deep learning safe for robot vision? Adversarial examples against the iCub humanoid. In ICCV Workshop ViPAR, 2017.

- Athalye, A., Carlini, N., Wagner, D. Obfuscated Gradients Give a False Sense of Security: Circumventing Defenses to Adversarial Examples. ArXiv, 2018.

- Athalye, A., Engstrom, L., Ilyas, A., Kwok, K. Synthesizing robust adversarial examples. ICLR, 2018.

- Jagielski, M., Oprea, A., Biggio, B., Liu, C., Nita-Rotaru, C., Li, B. Manipulating machine learning: Poisoning attacks and countermeasures for regression learning. IEEE Symp. SP, 2018.

Brief resume of the presenters

Battista Biggio is an Assistant Professor at the University of Cagliari, Italy, and the AI Chief Scientist of Pluribus One, a company that he co-founded in 2015. Pluribus One is a spin-off of the Pattern Recognition and Applications laboratory, developing machine-learning technologies for computer vision and security applications. His research over the past ten years has addressed theoretical and methodological issues in machine learning and pattern recognition to help solve real-world application problems in computer vision and security. He has made significant contributions to adversarial machine learning, playing a leading role in the establishment and advancement of this research field. He has published more than 60 articles on these research subjects, and many of his papers are frequently cited. In recent years, he has been invited to give several keynote speeches and lectures on adversarial machine learning. His h-index is 24 according to Google Scholar (April 2018), and his papers have collected more than 1,850 citations. He is a Senior Member of the IEEE and a Member of the IAPR, Chairman of the IAPR Technical Committee on Statistical Pattern Recognition Techniques, and Associate Editor of Pattern Recognition and of the IEEE Transactions on Neural Networks and Learning Systems.

Fabio Roli is a Professor of Computer Engineering at the University of Cagliari, Italy, and Director of the Pattern Recognition and Applications laboratory, which he founded in 1995 and which is now a world-class research lab with 30 staff members, including five tenured Faculty members. He is the R&D manager of the company Pluribus One, which he co-founded. He has been doing research on the design of pattern recognition systems for 30 years. Prof. Roli has published 81 journal articles and more than 250 conference articles on pattern recognition and machine learning, and many of his papers are frequently cited. His current h-index is 56 according to Google Scholar (April 2018), and his papers have collected 11,007 citations. He has been appointed Fellow of the IEEE and Fellow of the IAPR. He was the President of the Italian Group of Researchers in Pattern Recognition and the Chairman of the IAPR Technical Committee on Statistical Techniques in Pattern Recognition. He was a member of the NATO advisory panel for Information and Communications Security, NATO Science for Peace and Security (2008 – 2011). Prof. Roli is one of the pioneers of the use of pattern recognition and machine learning for computer security. He is often invited to give keynote speeches and tutorials on adversarial machine learning and data-driven technologies for security applications. He is (or has been) the PI of dozens of R&D projects, including the leading European projects on Security & Privacy CyberRoad and ILLBuster.